Manual Evaluations

Running Manual Evaluations

In a manual evaluation, the application variant is run against the evaluation dataset, and members of your team then annotate the results according to the rubric.

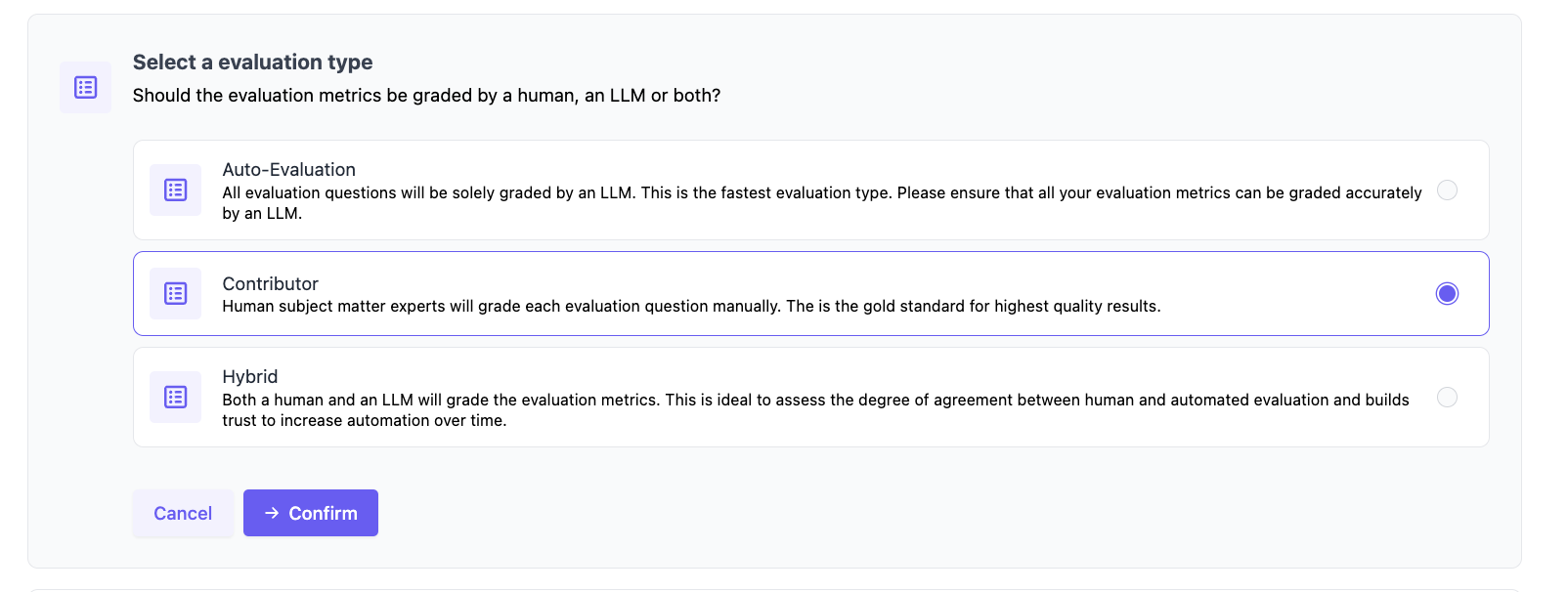

When configuring an evaluation run on the variant, select either Contributor or Hybrid to enable manual evaluation on the run. A Hybrid evaluation runs an auto-evaluation and also lets humans annotate the results.
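The difference between the two options can be summarized in a small sketch. This is an illustration only, not the platform's API: the `EvaluationType` enum, its string values, the `AUTO` member, and both helper functions are hypothetical names introduced here. It also assumes that a Contributor run is human-only, which the text implies but does not state outright.

```python
from enum import Enum


class EvaluationType(Enum):
    """Hypothetical model of the evaluation options; not a platform type."""
    AUTO = "auto"                # assumed auto-only option, not named in this guide
    CONTRIBUTOR = "contributor"  # humans annotate the results
    HYBRID = "hybrid"            # auto-evaluation plus human annotation


def enables_human_annotation(evaluation_type: EvaluationType) -> bool:
    # Contributor and Hybrid both enable manual evaluation on the run.
    return evaluation_type in (EvaluationType.CONTRIBUTOR, EvaluationType.HYBRID)


def runs_auto_evaluation(evaluation_type: EvaluationType) -> bool:
    # Assumption: only Hybrid (and the assumed AUTO option) run an auto-evaluation.
    return evaluation_type in (EvaluationType.AUTO, EvaluationType.HYBRID)


if __name__ == "__main__":
    for option in EvaluationType:
        print(option.name, enables_human_annotation(option), runs_auto_evaluation(option))
```

Under these assumptions, Hybrid is the only option for which both checks are true.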

Annotating Evaluations

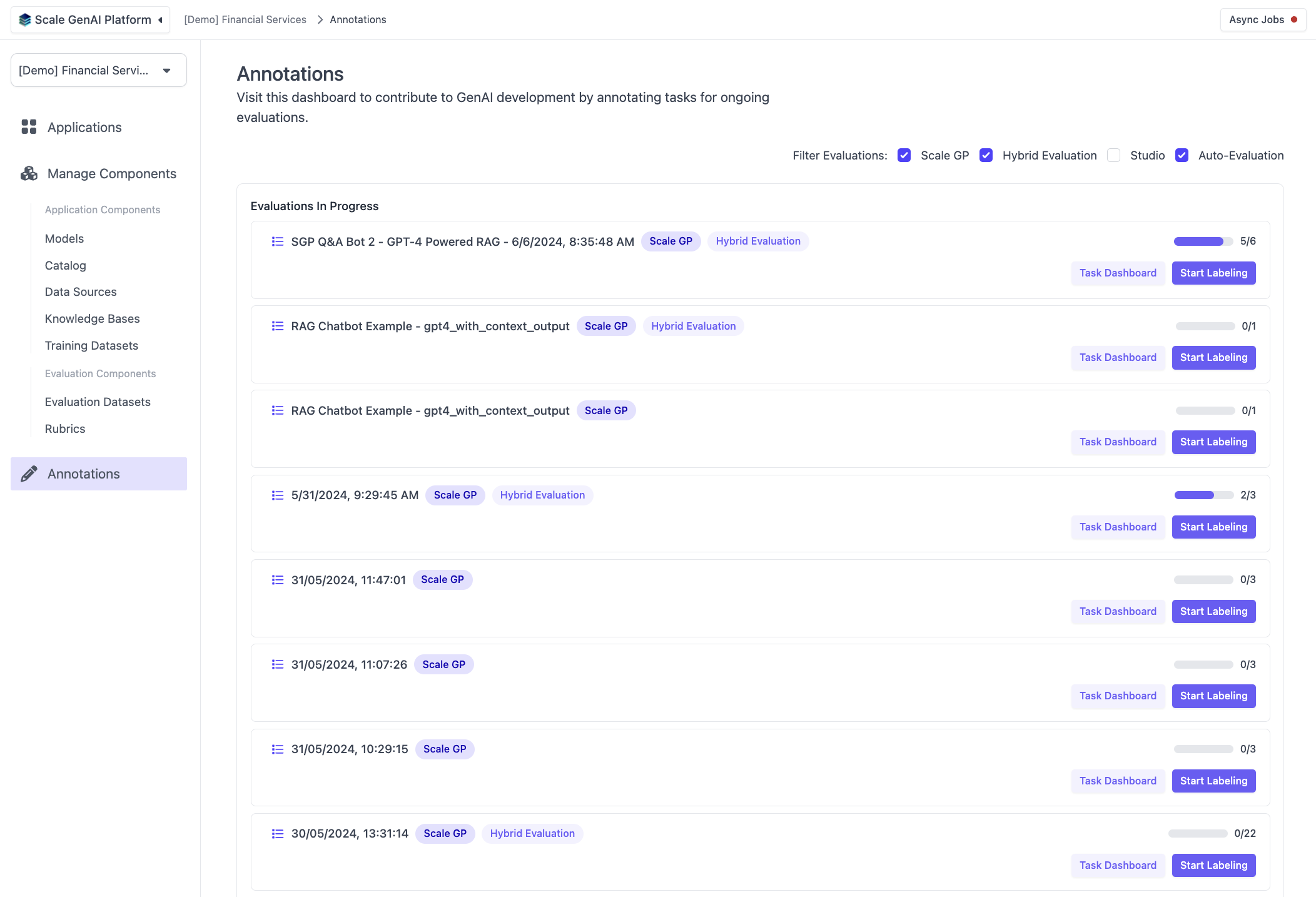

After a manual evaluation has been started, you (or your contributors) can begin labeling tasks from the Annotation tab in the platform.

Task Dashboard

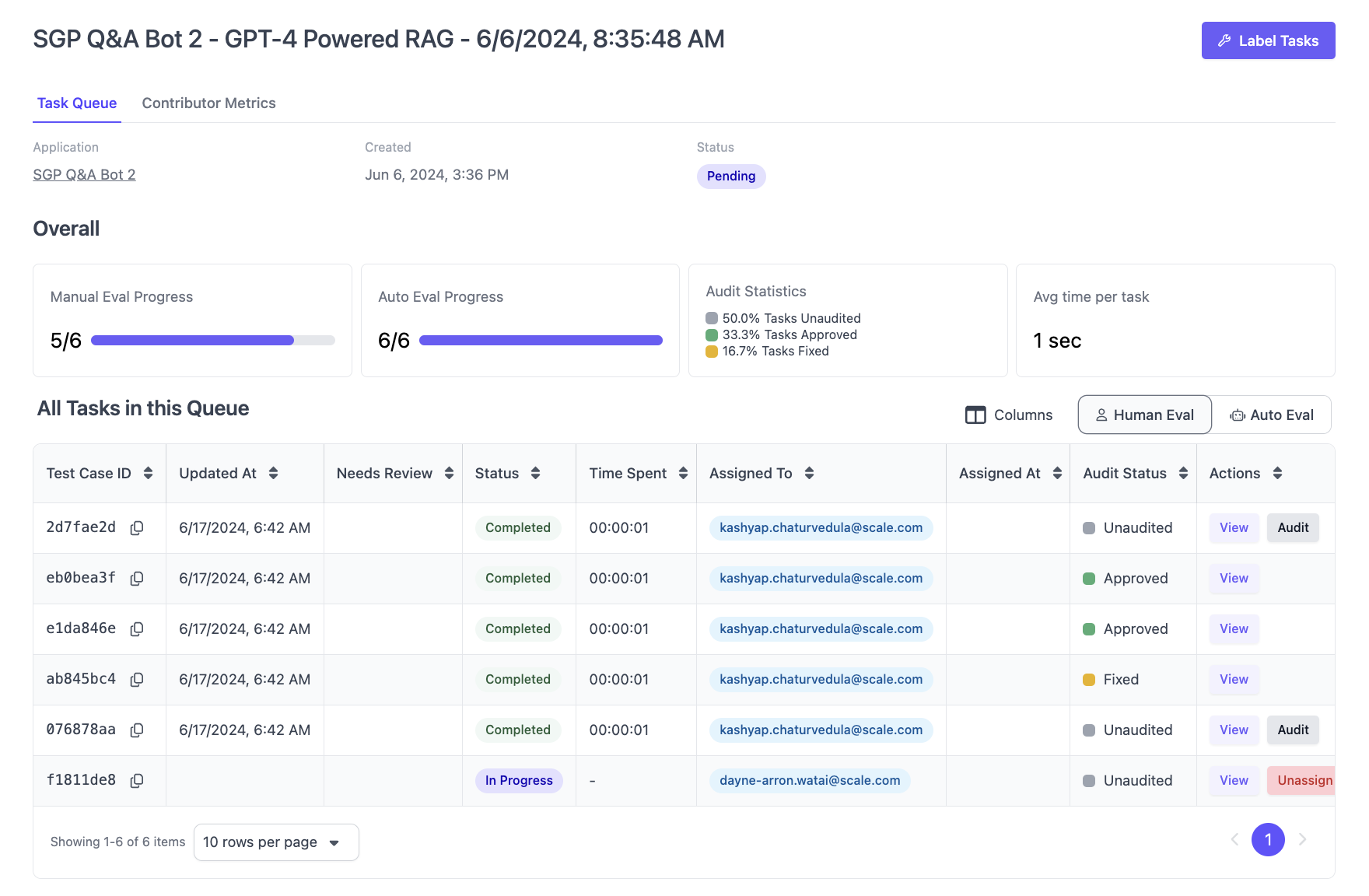

The Task Dashboard shows the queue of test cases in an evaluation run. From here, you can see who each test case is assigned to and the status of each task. You can also audit tasks from this view.

Annotator View

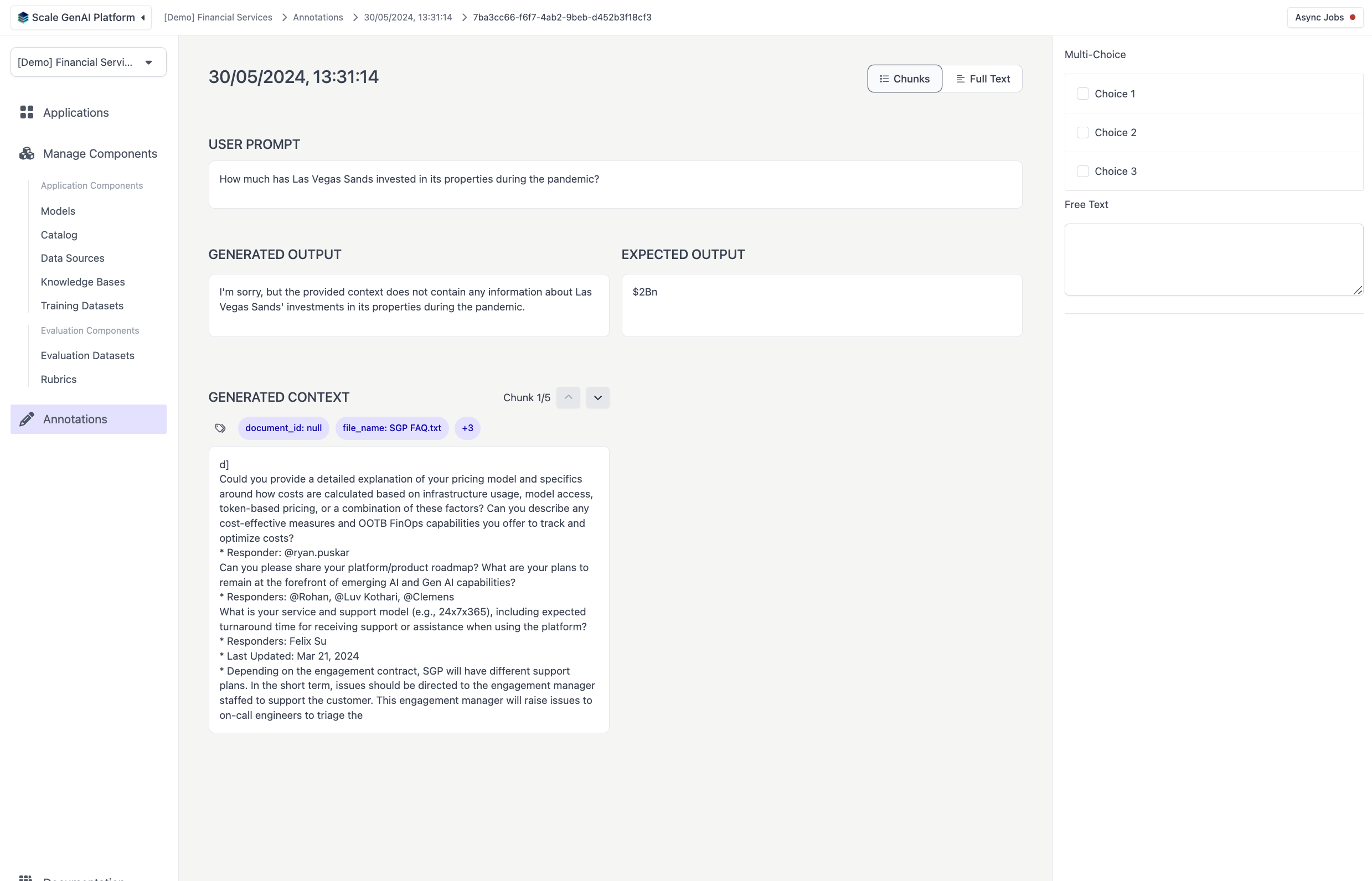

As an annotator, click Start Labeling in the Annotations view to begin labeling evaluation test cases.
