External Applications

Integrate off-platform applications with SGP for evaluation

Getting Started

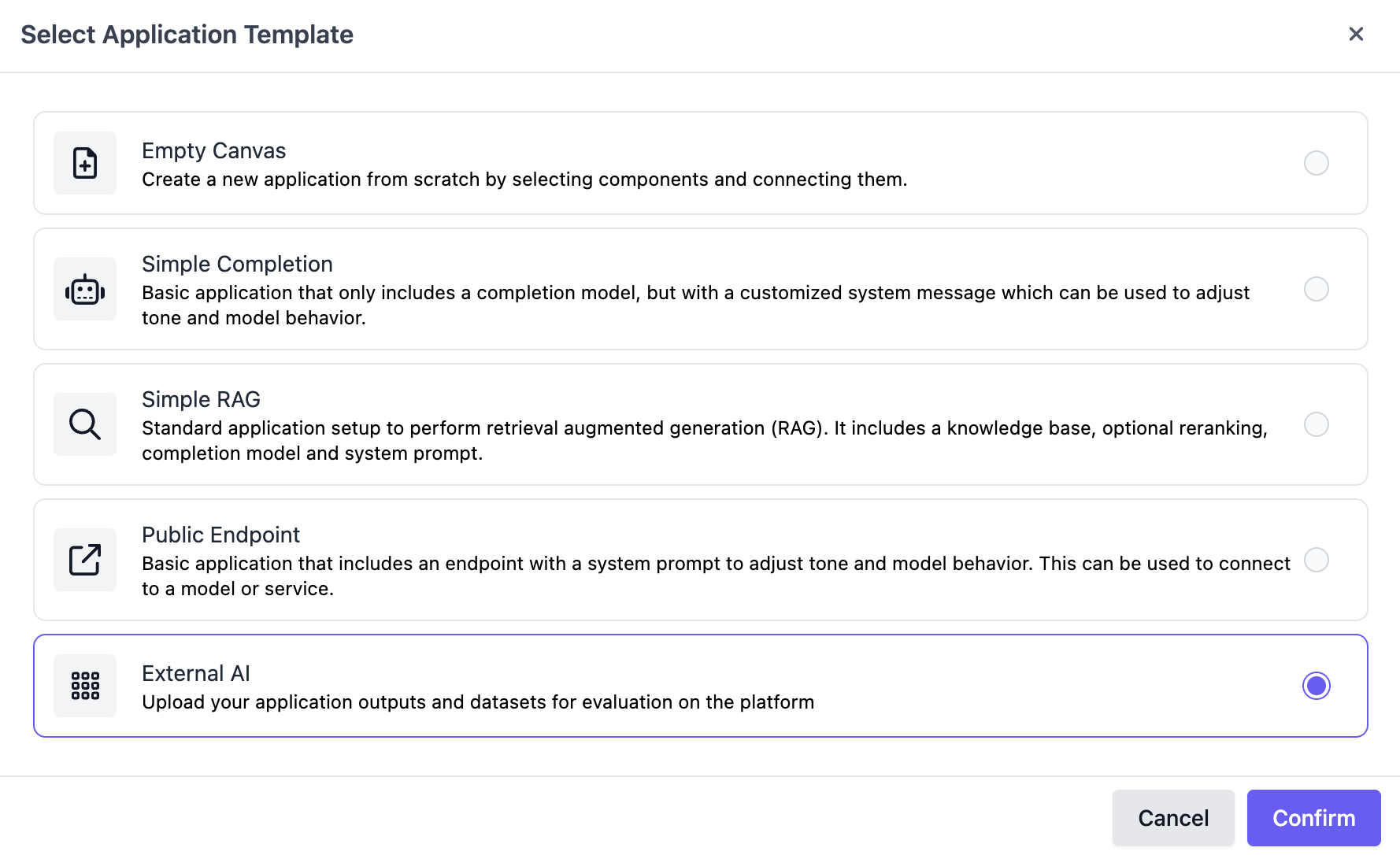

External applications provide a bridge between SGP and AI applications that exist off-platform. To get started, navigate to the "Applications" page on the SGP dashboard, click Create a new Application, and select External AI as the application template.

Integration

Use the SGP Python client to connect your external AI to SGP. This lets you run the test cases from an Evaluation Dataset in your SGP account against your external AI, and then run evaluations and generate a Scale Report.

Define an interface

To integrate your external AI with SGP, create an interface that runs your application locally. This interface is a Python callable that takes a single prompt and returns your external AI application's formatted output.

    from scale_gp.lib.external_applications import ExternalApplicationOutputCompletion

    def my_app_interface(prompt: str):
        output = ...  # Call your application with the `prompt` here
        return ExternalApplicationOutputCompletion(
            generation_output=output,
        )

For example, an integration with a LangChain model might look like this:

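    from langchain_core.messages import HumanMessage
    from scale_gp.lib.external_applications import ExternalApplicationOutputCompletion

    from .my_langchain_model import model

    def my_langchain_app_interface(prompt: str):
        # Send the prompt to the LangChain model as a single human message
        response = model.invoke([HumanMessage(content=prompt)])
        return ExternalApplicationOutputCompletion(
            generation_output=response.content,
        )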
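Before connecting an interface to SGP, it can help to check locally that it behaves like the callable described above. The following is a minimal sketch, not part of the SGP SDK: `smoke_test_interface` and `echo_interface` are hypothetical names, and the check assumes the returned object exposes its text as a `generation_output` attribute.

```python
from types import SimpleNamespace
from typing import Any, Callable, Iterable, List


def smoke_test_interface(
    interface: Callable[[str], Any],
    prompts: Iterable[str],
) -> List[str]:
    """Call `interface` on each prompt and return the generated texts.

    Raises ValueError if a result lacks a non-empty string `generation_output`.
    """
    outputs = []
    for prompt in prompts:
        result = interface(prompt)
        # Assumption: the output object exposes `generation_output` as an attribute.
        text = getattr(result, "generation_output", None)
        if not isinstance(text, str) or not text:
            raise ValueError(f"No usable generation_output for prompt: {prompt!r}")
        outputs.append(text)
    return outputs


# Stand-in interface so the sketch runs without the SDK installed; a real
# interface would return ExternalApplicationOutputCompletion instead.
def echo_interface(prompt: str) -> SimpleNamespace:
    return SimpleNamespace(generation_output=f"echo: {prompt}")


print(smoke_test_interface(echo_interface, ["hello", "world"]))
```

Running a handful of representative prompts through a check like this surfaces import errors and malformed outputs before any test cases are pulled from SGP.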

Custom Metrics

Optionally, you can include custom client-side metrics to display with your outputs. These values will surface on the details page for any evaluation run you create against your uploaded outputs.

    from scale_gp.lib.external_applications import ExternalApplicationOutputCompletion
    from scale_gp.types.evaluation_datasets.test_case import TestCase

    def my_app_interface(
        prompt: str,
        test_case: TestCase,  # Include a second parameter to access the full test case
    ):
        output = ...
        return ExternalApplicationOutputCompletion(
            generation_output=output,
            metrics={
                # get_token_count and compute_meteor_score are your own helper functions
                "completion_tokens": get_token_count(output),
                "meteor": compute_meteor_score(output, test_case.test_case_data.expected_output),
            }
        )
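The example above calls `get_token_count` and `compute_meteor_score` without defining them; they are helpers you supply yourself. As a rough sketch of what such helpers could look like, the block below counts tokens by splitting on whitespace (an approximation, not a model tokenizer) and computes a unigram-overlap F1 score. `unigram_f1` is a hypothetical, much simpler stand-in and is not the METEOR metric.

```python
from collections import Counter


def get_token_count(text: str) -> int:
    """Approximate token count by splitting on whitespace (not a model tokenizer)."""
    return len(text.split())


def unigram_f1(candidate: str, reference: str) -> float:
    """Unigram-overlap F1 between candidate and reference, case-insensitive."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count of each shared word.
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


metrics = {
    "completion_tokens": get_token_count("Paris is the capital"),
    "unigram_f1": unigram_f1("Paris is the capital", "The capital is Paris"),
}
print(metrics)
```

A dictionary built this way can be passed as the `metrics` argument shown above; for real evaluations you would likely swap in your model's tokenizer and an established metric implementation.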

Advanced: Other Output Types

If your application uses RAG (or any other methodology) to generate additional context to inject into an LLM prompt, you can use one of our additional output types to include the context with the text response.

Context - String

You can include additional context as a single piece of text using ExternalApplicationOutputContextString:

    from scale_gp.lib.external_applications import ExternalApplicationOutputContextString

    from .my_rag_pipeline import pipeline

    def my_rag_app_interface(prompt: str):
        response = pipeline.invoke(prompt)
        return ExternalApplicationOutputContextString(
            generation_output=response.message,
            info=response.context,
        )

Context - Chunks

If your application retrieves a list of documents to use as additional context, you can use ExternalApplicationOutputContextChunks to include each piece of text as a separate Chunk:

    from scale_gp.lib.external_applications import Chunk, ExternalApplicationOutputContextChunks

    from .my_rag_pipeline import pipeline

    def my_rag_app_interface(prompt: str):
        response = pipeline.invoke(prompt)
        # Return the chunks output type so each retrieved document is a separate Chunk
        return ExternalApplicationOutputContextChunks(
            generation_output=response.message,
            chunks=[
                Chunk(text=chunk.context, metadata={...})
                for chunk in response.context
            ]
        )

Generate Outputs

Now that you've defined an interface for your external AI application, you can generate outputs for an evaluation dataset to use for a custom evaluation or to generate a Scale Report. First, initialize an SGPClient with your API key, and an ExternalApplication with your external application variant ID and the interface you defined.

    from scale_gp import SGPClient
    from scale_gp.lib.external_applications import ExternalApplication

    client = SGPClient(api_key=...)

    external_application = ExternalApplication(client).initialize(
        application_variant_id=...,
        application=my_app_interface,
    )

Then find or create an evaluation dataset on the SGP dashboard, copy its ID and latest version number, and use them to generate outputs for its test cases with your external application.

    external_application.generate_outputs(
        evaluation_dataset_id=...,
        evaluation_dataset_version=...,
    )

Uploading Precomputed Outputs

If you have already generated outputs for your evaluation dataset outside of the SGP SDK, you can skip defining an ExternalApplication interface: create a mapping of test case IDs to outputs and upload it with the batch upload function. The ExternalApplication library class uses this same function under the hood.

    from typing import List, Tuple

    shared_params = {
        "account_id": ...,
        "application_variant_id": ...,
        "evaluation_dataset_version_num": ...,
    }

    my_outputs: List[Tuple[str, str]] = [
        ("<SOME_TEST_CASE_ID_1>", "My example output 1"),
        ("<SOME_TEST_CASE_ID_2>", "My example output 2"),
        ...
    ]  # Create a list of tuples here with the test case ID and output

    # `client` is an initialized SGPClient
    client.application_test_case_outputs.batch(
        items=[
            {
                **shared_params,
                "test_case_id": test_case_id,
                "output": {
                    "generation_output": output,
                },
            }
            for test_case_id, output in my_outputs
        ]
    )

You can also include context chunks used by your application for a given output:

    from typing import List, Tuple

    shared_params = {
        "account_id": ...,
        "application_variant_id": ...,
        "evaluation_dataset_version_num": ...,
    }

    my_outputs: List[Tuple[str, str, List[str]]] = [
        ("<SOME_TEST_CASE_ID>", "My example output", ["Text from chunk 1", "Text from chunk 2"])
    ]  # Create a list of tuples here with the test case ID, output, and context chunks

    # `client` is an initialized SGPClient
    client.application_test_case_outputs.batch(
        items=[
            {
                **shared_params,
                "test_case_id": test_case_id,
                "output": {
                    "generation_output": output,
                    "generation_extra_info": {
                        "schema_type": "CHUNKS",
                        "chunks": [
                            {
                                "text": text,
                                "metadata": {
                                    ...  # Include anything (or nothing)
                                },
                            }
                            for text in chunks
                        ],
                    },
                },
            }
            for test_case_id, output, chunks in my_outputs
        ]
    )
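If some of your precomputed outputs have context chunks and others do not, the two payload shapes above can be produced by one small helper. The sketch below only builds the `items` list and makes no SDK calls; `build_batch_items` is a hypothetical name, the field names are copied from the examples above, and the empty `metadata` dictionary and the string values in the usage lines are placeholders.

```python
from typing import Any, Dict, List, Optional, Sequence


def build_batch_items(
    shared_params: Dict[str, Any],
    outputs: Dict[str, str],
    chunks_by_test_case: Optional[Dict[str, Sequence[str]]] = None,
) -> List[Dict[str, Any]]:
    """Build one batch item per test case, adding chunks only where provided."""
    chunks_by_test_case = chunks_by_test_case or {}
    items = []
    for test_case_id, generation_output in outputs.items():
        output: Dict[str, Any] = {"generation_output": generation_output}
        chunk_texts = chunks_by_test_case.get(test_case_id)
        if chunk_texts:
            # Same structure as the context-chunks example above.
            output["generation_extra_info"] = {
                "schema_type": "CHUNKS",
                "chunks": [{"text": text, "metadata": {}} for text in chunk_texts],
            }
        items.append({**shared_params, "test_case_id": test_case_id, "output": output})
    return items


items = build_batch_items(
    {"account_id": "<ACCOUNT_ID>", "application_variant_id": "<VARIANT_ID>"},
    {"<SOME_TEST_CASE_ID_1>": "My example output 1"},
    {"<SOME_TEST_CASE_ID_1>": ["Text from chunk 1", "Text from chunk 2"]},
)
print(items)
```

The resulting list could then be passed as the `items` argument of `client.application_test_case_outputs.batch`, as in the examples above.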

Upload interactions to SGP

If you want to log interactions from your external application so that you can use SGP features such as evaluations and application monitoring, use the interactions.create method. If the internal building blocks of your application (such as a reranking or completion component) emit additional metadata, you can attach it to the interaction as trace spans:

    from scale_gp import SGPClient
    from scale_gp.types.interaction_create_params import Input, Output, TraceSpan

    client = SGPClient(account_id=..., api_key=...)

    client.interactions.create(
        application_variant_id=...,
        input=Input(query="What is the speed of light in a vacuum?"),
        output=Output(response="The speed of light in a vacuum is approximately 299,792 (km/s)."),
        start_timestamp="2024-08-22T14:22:27.502Z",
        duration_ms=3200,
        operation_metadata={"user_id": "user-123"},
        trace_spans=[
            TraceSpan(
                node_id="RETRIEVAL-NODE-1",
                operation_type="RETRIEVAL",  # Supported values are: COMPLETION, RERANKING, RETRIEVAL, CUSTOM
                start_timestamp="2024-08-22T14:22:28.502Z",
                duration_ms=2200
            ),
            TraceSpan(
                node_id="COMPLETION-NODE-1",
                operation_type="COMPLETION",
                start_timestamp="2024-08-22T14:22:30.502Z",
                duration_ms=1000,
                operation_metadata={"tokens_used": 420}
            )
        ],
    )

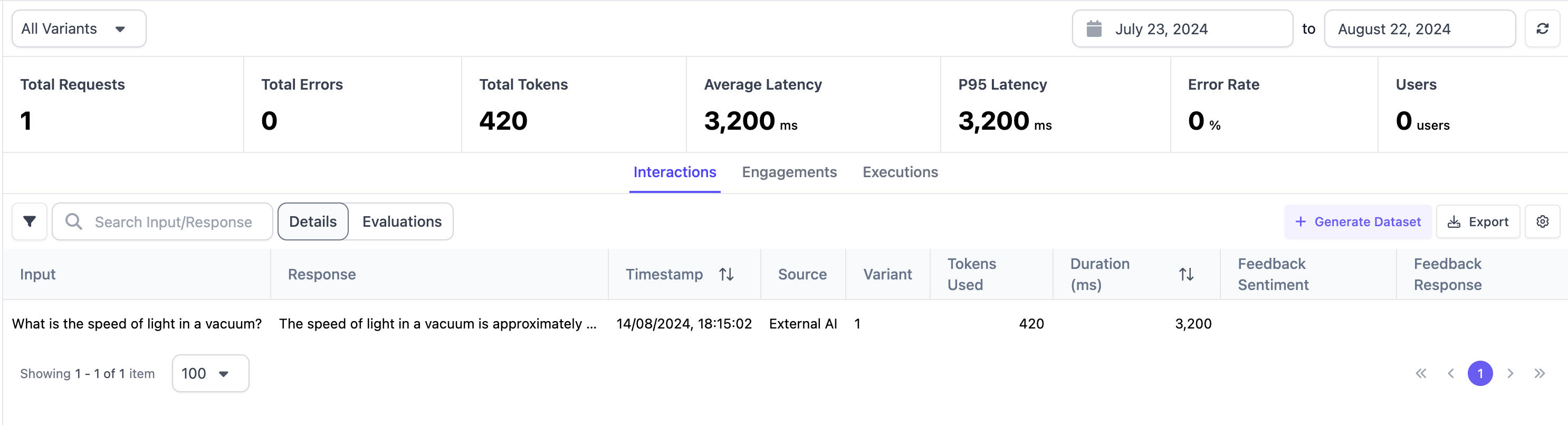

The registered interactions will then show up on the Application Monitoring page, which you can reach from the Applications page by clicking on your External Application instance and selecting Monitoring Dashboard. From this page, you can check the details of your interactions, such as the request latency or the error rate. If you click on an interaction, you can also see additional details about it, as well as the information emitted by the trace spans.

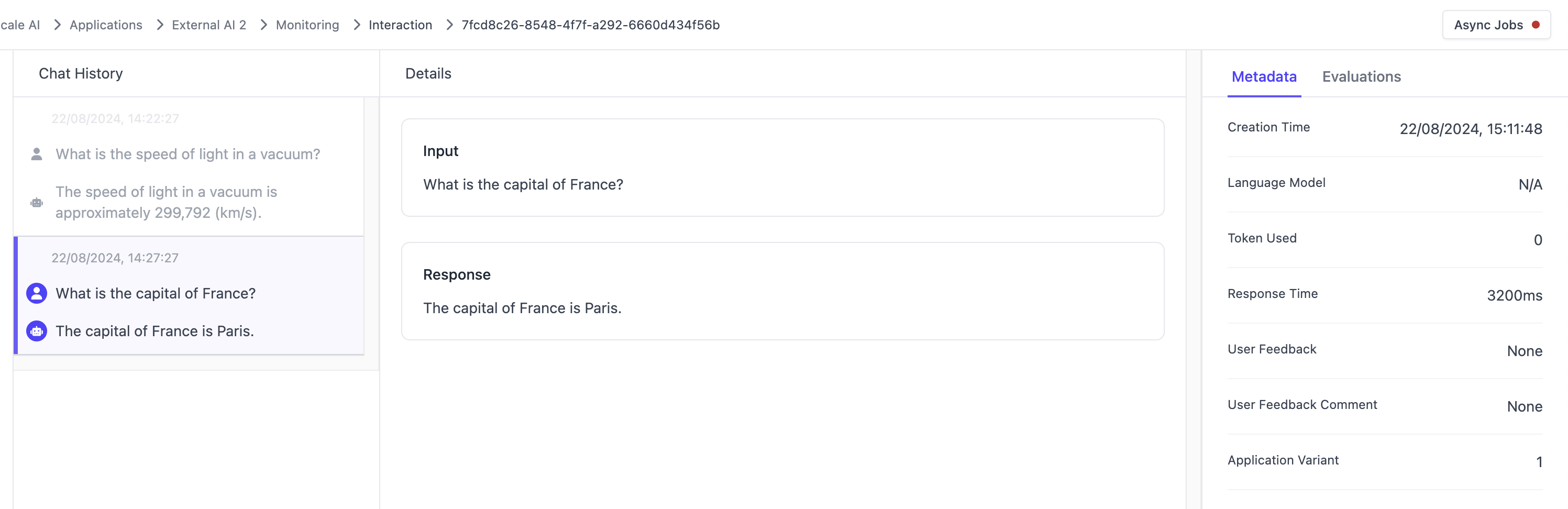
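The interactions.create example above hardcodes `start_timestamp` and `duration_ms`. In a running application you would typically measure them around the call to your model. The helper below is a minimal sketch of one way to do that with the standard library; `timed_call` is a hypothetical name, and the timestamp format (UTC, millisecond precision, trailing "Z") is chosen to match the strings in the example rather than taken from an SGP specification.

```python
import time
from datetime import datetime, timezone
from typing import Any, Callable, Tuple


def timed_call(fn: Callable[..., Any], *args: Any, **kwargs: Any) -> Tuple[Any, str, int]:
    """Run `fn` and return (result, start_timestamp, duration_ms)."""
    started_at = datetime.now(timezone.utc)
    t0 = time.perf_counter()  # Monotonic clock, suited to measuring elapsed time
    result = fn(*args, **kwargs)
    duration_ms = int((time.perf_counter() - t0) * 1000)
    # ISO 8601 with millisecond precision, using "Z" instead of "+00:00"
    start_timestamp = started_at.isoformat(timespec="milliseconds").replace("+00:00", "Z")
    return result, start_timestamp, duration_ms


answer, start_timestamp, duration_ms = timed_call(str.upper, "hello")
print(answer, start_timestamp, duration_ms)
```

The returned `start_timestamp` and `duration_ms` can then be passed to interactions.create, and the same helper can wrap individual components to produce values for trace spans.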

Creating threads for interactions

If your application supports multi-turn interactions, you may want to group interactions that are part of the same conversation. To do this, generate a UUID, pass it as the thread_id of the first interaction, and reuse the same thread_id for every follow-up interaction.

    import uuid

    from scale_gp import SGPClient
    from scale_gp.types.interaction_create_params import Input, Output

    client = SGPClient(account_id=..., api_key=...)

    application_variant_id = ...
    thread_id = str(uuid.uuid4())

    client.interactions.create(
        application_variant_id=application_variant_id,
        thread_id=thread_id,
        input=Input(query="What is the speed of light in a vacuum?"),
        output=Output(response="The speed of light in a vacuum is approximately 299,792 (km/s)."),
        start_timestamp="2024-08-22T14:22:27.502Z",
        duration_ms=3200,
    )

    client.interactions.create(
        application_variant_id=application_variant_id,
        thread_id=thread_id,
        input=Input(query="What is the capital of France?"),
        output=Output(response="The capital of France is Paris."),
        start_timestamp="2024-08-22T14:27:27.502Z",
        duration_ms=3200,
    )

This groups the interactions into a single thread on the interaction details page.
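When an application serves many conversations at once, each one needs its own thread ID that stays the same across turns. One possible approach, sketched here under the assumption that your application already has some per-conversation key (a session ID, for instance), is to keep a mapping from that key to a generated UUID. `ThreadRegistry` is a hypothetical helper and not part of the SGP SDK, and this in-memory version does not persist across restarts.

```python
import uuid
from typing import Dict


class ThreadRegistry:
    """Map an application-side conversation key to a stable thread_id."""

    def __init__(self) -> None:
        self._thread_ids: Dict[str, str] = {}

    def thread_id_for(self, conversation_key: str) -> str:
        # Create a UUID the first time a conversation is seen, then reuse it.
        if conversation_key not in self._thread_ids:
            self._thread_ids[conversation_key] = str(uuid.uuid4())
        return self._thread_ids[conversation_key]


registry = ThreadRegistry()
first = registry.thread_id_for("session-42")
follow_up = registry.thread_id_for("session-42")
other = registry.thread_id_for("session-43")
print(first == follow_up, first == other)
```

The value returned by `thread_id_for` would be passed as the `thread_id` argument of interactions.create for every turn of that conversation.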
