Auto Evaluations

In an auto evaluation, the application variant is run against the evaluation dataset, and an LLM then annotates each result according to the rubric.
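The two-step flow described above can be sketched in Python. This is a minimal illustration, not the product's implementation. `run_variant` and `judge` are hypothetical callables standing in for the application variant and the LLM annotator, and the integer score plus rationale is an assumed annotation shape.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Annotation:
    input_text: str
    output: str
    score: int       # assumed rubric score; the real annotation format may differ
    rationale: str   # assumed free-text explanation from the LLM judge


def auto_evaluate(
    dataset: list[str],
    run_variant: Callable[[str], str],                   # hypothetical: the application variant
    judge: Callable[[str, str, str], tuple[int, str]],   # hypothetical: the LLM annotator
    rubric: str,
) -> list[Annotation]:
    """Run the variant on every dataset item, then have the judge annotate each result."""
    # Step 1: run the application variant against the evaluation dataset.
    outputs = [run_variant(item) for item in dataset]

    # Step 2: ask the judge to annotate each (input, output) pair against the rubric.
    annotations = []
    for item, output in zip(dataset, outputs):
        score, rationale = judge(rubric, item, output)
        annotations.append(Annotation(item, output, score, rationale))
    return annotations


# Usage with stub functions in place of a real variant and a real LLM judge.
def fake_variant(prompt: str) -> str:
    return prompt.upper()


def fake_judge(rubric: str, inp: str, out: str) -> tuple[int, str]:
    return (1 if out.isupper() else 0, "checked casing")


results = auto_evaluate(["hello", "world"], fake_variant, fake_judge, "Output must be uppercase.")
```

Keeping the variant and the judge as separate callables mirrors the separation in the text: generating outputs and grading them are independent steps, so either one can be swapped without touching the other.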

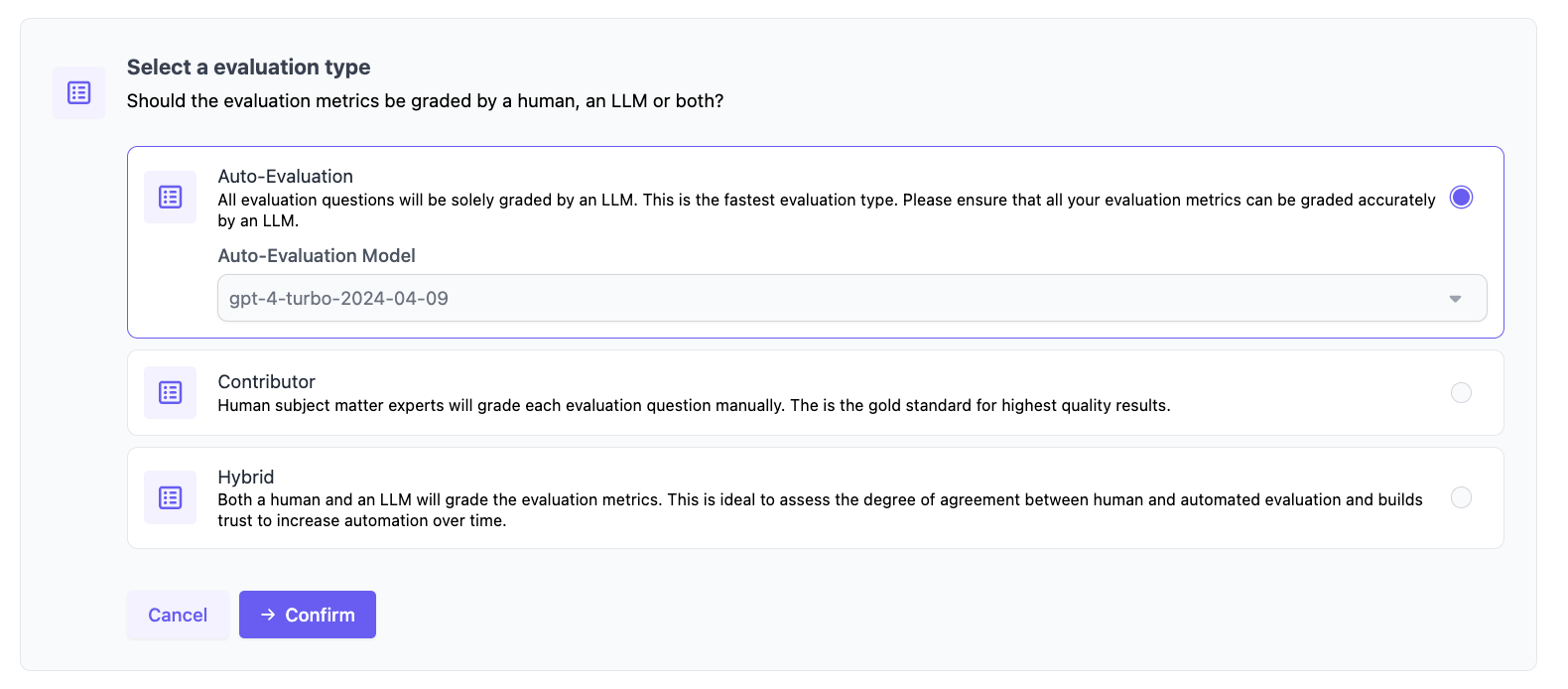

When configuring an evaluation run on the variant, select either Auto-Evaluation or Hybrid to enable auto evaluation for that run. A Hybrid evaluation runs an auto evaluation and also lets human reviewers annotate the results.

The evaluation runs as an asynchronous job in the background. When it finishes, its updated status appears on the application page.
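The background behavior can be illustrated with a small `asyncio` sketch. `EvaluationJob`, its `status` field, and the polling loop are hypothetical stand-ins, not the product's API. The point is only that starting the job does not block the caller, who checks the status until it reports completion, much as a user re-checks the application page.

```python
import asyncio
from enum import Enum


class Status(Enum):
    RUNNING = "running"
    COMPLETE = "complete"


class EvaluationJob:
    """Hypothetical stand-in for a background evaluation job."""

    def __init__(self, items: list[str]) -> None:
        self.items = items
        self.status = Status.RUNNING
        self.results: list[str] = []

    async def run(self) -> None:
        for item in self.items:
            await asyncio.sleep(0)  # yield control to simulate asynchronous per-item work
            self.results.append(f"annotated:{item}")
        self.status = Status.COMPLETE


async def main() -> EvaluationJob:
    job = EvaluationJob(["q1", "q2", "q3"])
    # Schedule the job in the background; the caller keeps control.
    task = asyncio.create_task(job.run())
    # Poll the status until the job reports completion.
    while job.status is not Status.COMPLETE:
        await asyncio.sleep(0.01)
    await task  # surface any exception raised inside the job
    return job


job = asyncio.run(main())
```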
